“It turns out that asking chatbots to give short answers can increase hallucinations,” writes www.unian.ua.

It turns out that asking chatbots for short answers can make them hallucinate more.

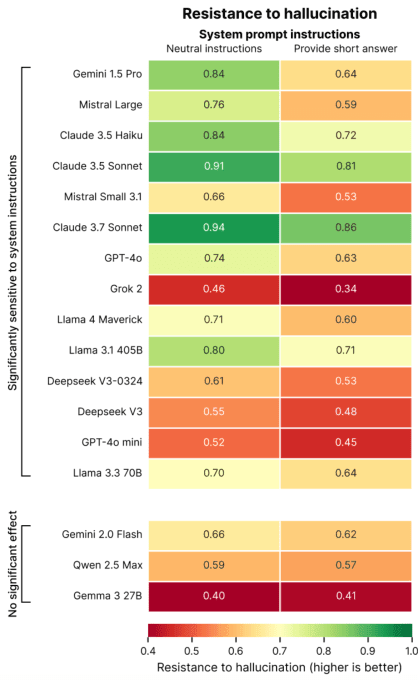

Chatbots are more likely to lie if you ask them to be brief, research finds / photo: TechCrunch

A study by Giskard, a company that develops a holistic benchmark for AI models, has found that asking a chatbot to keep its answers short can make it hallucinate more than usual.

According to the researchers' blog post, this is especially noticeable in leading models such as GPT-4o, Mistral Large, and Claude 3.7 Sonnet, which struggle to stay accurate when asked for a short answer.

The researchers suggest that short answers leave neural networks no “space” for clarifications and rebuttals, which are critical for tasks that depend on factual accuracy. In other words, strong rebuttals require longer explanations.

“When forced to keep it short, models consistently choose brevity over accuracy,” the researchers write.

The Giskard study also contains other notable findings: for example, AI models are less likely to refute controversial claims when users present them confidently. This forces developers to choose between user convenience and factual accuracy.

Source: Giskard

Hallucinations, that is, false or fabricated answers, remain an unsolved problem in AI. Moreover, even the most advanced reasoning models, such as OpenAI's o3, hallucinate more often than their predecessors.

The head of NVIDIA believes it will take at least a few more years to solve the problem of “hallucinations.” In his view, people should not have to doubt AI answers, wondering whether they are “hallucinations or not” and “reasonable or not.”

As UNIAN has already reported, more than 52,000 IT workers have been laid off worldwide since the beginning of 2025. The reason is the development of artificial intelligence, which can effectively handle routine programming tasks, including writing and testing code.